NVIDIA’s business breadth and focus have evolved over the years. Starting out as a graphics accelerator chip vendor (we didn’t even call them GPUs back then!) nearly 30 years ago, the company now has its fingers in virtually every corner of the world’s high-performance computing markets. The pace of expansion should only grow in the near future, thanks to the recent substantial acquisition of Mellanox and the huge, still-possible acquisition of ARM.

The vibe and messaging of the annual GTC reflected NVIDIA’s evolution, with GPU compute, supercomputing, automotive and machine learning taking an ever-increasing share of the limelight. Still though, a new graphics chip architecture or GPU product launch always managed to get its share of airtime. Not so this year, as the launch of a wide range of new GPUs — from fixed to mobile client to datacenter — did not get so much as a mention in CEO Jensen Huang’s 90-minute keynote. For good reason, it turns out, as even 90 minutes proved precious little time to cover the impressive breadth of NVIDIA’s initiatives across the computing landscape.

While Huang’s keynote may not have focused on GPUs per se, it did present an exciting, holistic vision for the future of 3D visual computing built on the company’s expanding line of RTX GPUs: Omniverse, a centralized platform enabling global collaboration and encompassing the breadth of CAD-oriented workflows, particularly in AEC, design, and manufacturing.

We discuss the new RTX products in part 2 of our report.

Introducing: NVIDIA’s Omniverse

While the new RTX-branded products didn’t get attention individually in the keynote, the RTX technology foundation of those GPUs did, as a key component to an expansive and ambitious initiative, Omniverse. A virtual, cloud-centric 3D design environment, Omniverse enables remote visualization, collaboration, and simulation seamlessly across multiple geographic sites and applications.

Omniverse creates a remote, virtual real-time collaboration environment, built on popular applications and standard model descriptions (image source: NVIDIA).

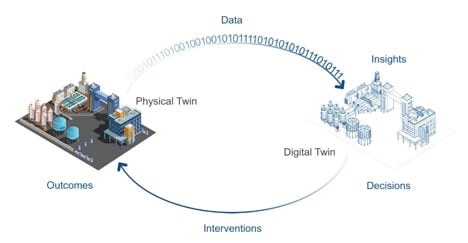

The Omniverse platform is founded on a centralized, cloud-based topology, where one model, one database, in one format is shared, accessed, modified, and verified by staff that may potentially be scattered across the globe. As with today’s 3D CAD methodologies, the platform’s goal is to enable creation of a true digital twin, a virtual replica with associated design information, perfectly faithful to the eventual physical implementation.

Only one twin. One critical attribute of Omniverse — and a priority goal for moving to a centralized computing environment — is that in this case, there is specifically one twin mirroring the eventual, or even concurrent, physical implementation. That means all contributors operate on the one database — no more multiple copies scattered across many users and locations, wasted transfer time and bandwidth, and error-prone coherency issues while managing disparate versions. Whatever’s there is guaranteed to be the latest, most up-to-date version of the database.

Open standards. A second attribute, arguably the linchpin to the efficacy and appeal of the approach, is the platform’s reliance on a common, open standards-compliant model description, one that can be created and edited by popular CAD tools, the same tools that in a traditional client-side environment would mean umpteen different versions of — and conversions between — models and ancillary data formats.

Centralized computing environment. Finally, Omniverse rests on a centralized computing topology, with users accessing the environment remotely via datacenter-resident workstations, either virtually or physically configured. All the goodness and appeal of centralized computing comes along with it, a topic covered extensively in this column over recent years. By combining the shared Omniverse model with a centralized computing environment, NVIDIA and its partners look to create the virtual, cloud-based 3D design environment that some, with good reason, would view as a holy grail of modern 3D design, addressing many of the thorny problems plaguing traditional client-centric topologies: big data, security, 24/7 remote access, and application and data interoperability.

A Long Time Coming, For Good Reason

GTC ’21 by no means marks the first mention of Omniverse. As is so often the case with such a massive undertaking, one that requires coordination of so many components as well as participation and contribution from the full breadth of the CAD ecosystem, Omniverse has been under development for years. Its origin can be traced back to internal tools that were first exposed externally back at GTC ’17 in Project Holodeck. Borrowing the name from Star Trek, Project Holodeck let collaborators interact remotely with the same virtual model and each other to review designs and test for optimal ergonomic form and function.

An early demo of the VR-oriented Project Holodeck (image source: NVIDIA).

Project Holodeck’s collaborative capabilities were somewhat entwined with, and in retrospect perhaps overshadowed by, the coincident push into virtual reality (VR). That VR emphasis may have obscured Holodeck’s arguably greater value to potential adopters: its ability to remotely and effectively co-develop a digital twin in a shared environment.

The Omniverse Architecture

Built on Pixar’s Universal Scene Description (USD), Omniverse provides a common, visual language to act as a standard baseline for all collaborators. The common foundation eliminates constant importing and exporting, with multiple users designing within the same model environment using applications running in real-time, live across the network.

USD’s concept of layers is a critical component for Omniverse’s core goal of concurrent collaboration by allowing multiple users to operate on the same model or sub-model. Each can work within a separate layer of the model, encapsulating different attributes that don’t necessarily impact the other layers. For example, imagine one graphic designer working on part material while another continues to model the shape or simulate lighting or airflow.
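To make the layering idea concrete, here is a minimal sketch in plain Python. It does not use the USD API; the layer names, attribute paths, and the `compose` helper are all hypothetical, chosen only to illustrate how separate contributors can each hold "opinions" in their own layer, with a stronger layer overriding a weaker one when the model is composed.

```python
from collections import ChainMap

# Hypothetical layers: each maps "prim path:attribute" to an opinion (a value).
# These structures are illustrative only and are NOT the USD API.
geometry_layer = {
    "/Bracket:shape": "extruded_profile_v3",
    "/Bracket:material": "default_gray",
}
material_layer = {
    "/Bracket:material": "brushed_aluminum",  # overrides the modeler's default
}
lighting_layer = {
    "/KeyLight:intensity": 1200,
}


def compose(*layers_strongest_first):
    """Resolve each attribute to the opinion of the strongest layer that has one."""
    # ChainMap looks keys up in the first mapping first, so order encodes strength.
    return dict(ChainMap(*layers_strongest_first))


composed = compose(lighting_layer, material_layer, geometry_layer)

# The modeler keeps refining the shape in the geometry layer only...
geometry_layer["/Bracket:shape"] = "extruded_profile_v4"
recomposed = compose(lighting_layer, material_layer, geometry_layer)

# ...and the material designer's override is untouched by that edit.
print(recomposed["/Bracket:shape"], recomposed["/Bracket:material"])
```

The point of the sketch is the separation: the shape edit and the material override live in different layers, so neither contributor overwrites the other's work.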

Supporting USD’s standard scene description on the materials side of the equation is NVIDIA’s Material Definition Language (MDL), an open standard that integrates physically based material descriptions into renderers, including Omniverse’s.

Omniverse’s common baseline visual computing environment allows for multiple contributors across networks with minimal bandwidth requirements (image source: NVIDIA).

The crux of Omniverse functionality lies in its primary platform components: Nucleus, Connect, Kit, Simulation, and RTX Renderer. Plug-in Connectors let individual users’ applications connect to the Omniverse environment. Of particular interest in the CAD space, Omniverse currently offers plug-ins for 3ds Max, Blender, Maya, SketchUp, Revit, and Substance Designer and Painter, with SOLIDWORKS support coming soon.

The end-user’s Omniverse Platform environment (image source: NVIDIA).

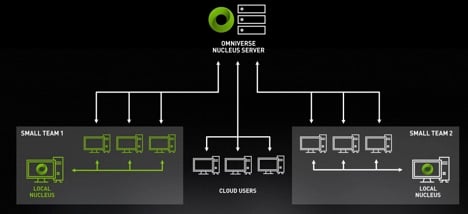

Since everyone’s operating on the same and only model, the only data transmitted over the network are design changes, which are then reflected in real time among all collaborators. The Nucleus server is the traffic cop tasked with keeping all applications and collaborators in sync by communicating the deltas: the information indicating what changed from each user’s view of the model’s previous state. Notably, Nucleus can operate as the global coordinator in the cloud, as a local manager on-premises for colocated sub-teams, or as both in a hierarchical fashion, for example where one office is developing all the assets for one component of the design. In the AEC world, imagine one team working on the building and another focused on landscaping, each in its own office collaborating within its domain while the master Omniverse Nucleus server synchronizes the building and landscaping into one database.

Omniverse Nucleus Server: the traffic cop maintaining database synchronization for all users, potentially in a hierarchical fashion (image source: NVIDIA).
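The delta-based synchronization described above can be sketched in a few lines of Python. This is an illustration of the general idea only, not the Nucleus API: `Delta`, `Client`, and `SyncServer` are hypothetical names, and the sketch covers a single server rather than the hierarchical arrangement.

```python
from dataclasses import dataclass, field


@dataclass
class Delta:
    path: str      # which attribute of the shared model changed
    value: object  # its new value


@dataclass
class Client:
    name: str
    view: dict = field(default_factory=dict)  # this collaborator's view of the model

    def apply(self, delta: Delta) -> None:
        self.view[delta.path] = delta.value


@dataclass
class SyncServer:
    """Hypothetical stand-in for the coordinating server (not the Nucleus API)."""
    model: dict = field(default_factory=dict)
    clients: list = field(default_factory=list)

    def connect(self, client: Client) -> None:
        client.view = dict(self.model)  # one-time full copy when a client joins
        self.clients.append(client)

    def publish(self, delta: Delta) -> None:
        # Update the single authoritative model, then send only the change
        # (never the whole model) to every connected collaborator.
        self.model[delta.path] = delta.value
        for client in self.clients:
            client.apply(delta)


server = SyncServer(model={"/Building:floors": 12, "/Landscape:trees": 40})
architect, landscaper = Client("architect"), Client("landscaper")
server.connect(architect)
server.connect(landscaper)

# The architect's edit travels as one small delta; everyone stays in sync.
server.publish(Delta("/Building:floors", 14))
print(landscaper.view)
```

After the initial connection, the only thing crossing the network in this sketch is the `Delta` itself, which is why bandwidth stays low even when the underlying model is large.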

Filling an obvious need for the CAD environment, Omniverse provides native simulation and rendering engines, including PhysX, RTX, and AI. PhysX provides physically accurate simulation for fluids, particles, and waves. Common renderers are included, such as GPU-accelerated Iray. And while Omniverse and RTX are clearly forward-looking in the sense that they’re framed around rendering as the default visualization (if not now, then eventually), Omniverse supports conventional 3D raster graphics as well, for example via Pixar’s OpenGL-based graphics engine.

While virtual and augmented reality views are not positioned as the crux of Omniverse’s proposition, NVIDIA supports both via CloudXR, providing VR views in to the environment as well as AR views out (that would then be processed locally to integrate the synthetic model into the real-world environment).

Omniverse Applications

In any design environment, the tools, flow, and comprehensive set of must-have needs are never a one-size-fits-all proposition. To both customize and build upon Omniverse’s base capabilities, NVIDIA includes Kit, which exposes tools for developers to exploit, including Python, the aforementioned components such as PhysX and Nucleus, and extensions for things like managing assets and textures. To address some of the more obvious needs for higher-level applications and help kickstart momentum for the Omniverse ecosystem, NVIDIA stepped up to create and provide pre-packaged, out-of-the-box applications for Omniverse users, including Create and View, both particularly relevant for CAD use.

Ready, Set, Go!

Once any core technological infrastructure is in place, the next critical step is getting it out to users. NVIDIA counts several big names among its list of early partners and adopters, for example Bentley Systems and BMW.

BMW. Think BMW and your CAD focus would justifiably jump to automotive design. But in this instance, the company’s use of Omniverse is just as relevant to AEC and manufacturing as product design. They are using the environment in conjunction with Unreal Engine for pre-construction factory planning and optimization. The objective: to produce a digital twin of the factory floors, capable of simulating all stages of logistics, supply, and assembly. With that digital twin, BMW can rehearse all their manufacturing processes in the digital realm, optimizing how robots, humans, parts, and tools all interrelate in the physical world. The hope is to smooth out potential hiccups and find subtle optimizations that will pay off big before committing to construction and implementation.

Omniverse is an easy-to-use 3D environment for a wide range of users, many of whom are not necessarily tech-savvy. BMW structured its Omniverse environment around the Unreal Engine for real-time graphics, with additional connectors to Bentley MicroStation, SOLIDWORKS, and CATIA.

BMW harnessing Omniverse to create, simulate and optimize its factory floors (image source: BMW).

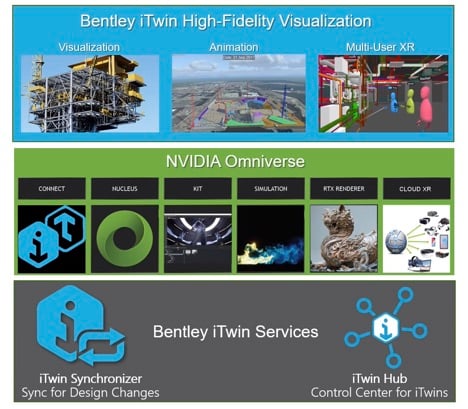

Bentley Systems is a long-time force in the world of AEC CAD, focused on the biggest public and private infrastructure projects. Its software is pervasive across the globe, used to design, evaluate, and simulate projects from airports to bridges, campuses, and water systems. Omniverse is a natural tool for Bentley to work with, as the philosophy behind Omniverse dovetailed with Bentley’s existing initiative to create an optimal digital twin computing environment, called iTwin. Bentley has integrated Omniverse into its iTwin environment, adding its own Omniverse applications for visualization, animation, and multi-user XR on top.

Omniverse a logical platform for integration with Bentley’s iTwin (image source: Bentley Systems).

If you were to consider the value of a digital twin in AEC, thoughts likely immediately focus on design of new structures. Build the structure and the project’s over. Fair enough. But for Bentley, ribbon-cutting on a new capital project might just be the start of its involvement.

Bentley is leveraging the digital twin concept further with Omniverse to help monitor, diagnose, and, just as importantly, predict infrastructure integrity and resilience over its lifetime. Maintained over the infrastructure’s lifetime, and critically fed with real-world image and sensor data, the digital twin can reveal problematic issues that may go unseen in the physical structures and suggest preemptive intervention. One example is Bentley’s ongoing work with a digital twin model of Singapore’s water system (or at least some portion of it) to detect problems potentially imminent in the physical world. Augmented by machine-learned AI on the lookout for possible problematic real-world data signatures and sequences, Bentley’s digital twin successfully flagged a possible water main break three days before it actually happened.

For Bentley Systems and its customers, an Omniverse-enabled digital twin with real-world feedback can offer as much value post-construction (image source: NVIDIA).

Is Omniverse a Game-Changer for CAD?

It’s an overused term, and often, in retrospect, the technology it’s applied to ends up falling short. But in some cases, “game changer” certainly applies. And at least conceptually, if Omniverse isn’t the ultimate game-changer, it’s a big step on the way there, particularly in the context of large-scale, geographically dispersed AEC and design applications.

There is always, of course, the not-so-trivial matter of transitioning from the conceptual to the practical, beyond beta programs and early adoption to mass acceptance. Yes, Omniverse is in use and is checking all the boxes it should be at this point. Its appeal is beyond question, and the solution appears comprehensive, particularly with NVIDIA in the driver’s seat. We look forward to seeing this technology reach full maturity with momentum that we can count on for the long term.

If you want to further explore or demo Omniverse, hop on over to NVIDIA’s Developer page for Omniverse to dig deeper and download the open Beta.

Click here for part two of our coverage of NVIDIA's GTC show! You can join the GTC After Party online and catch a variety of classes and technology briefs.

Alex Herrera

With more than 30 years of engineering, marketing, and management experience in the semiconductor industry, Alex Herrera is a consultant focusing on high-performance graphics and workstations. Author of frequent articles covering both the business and technology of graphics, he is also responsible for the Workstation Report series, published by Jon Peddie Research.
