The pandemic didn’t stop Nvidia from convening its annual GPU Technology Conference (GTC) last spring. The conference went fully virtual and by all accounts proved successful, so much so that more than a few participants were left wondering why conferences like GTC didn’t take that path long ago. That sentiment, along with the realization of how much simpler it was to host GTC virtually, likely led Nvidia to double up on GTC this year, convening the virtual show once again this October.

Last spring, the company’s new Ampere-generation graphics processing unit (GPU) grabbed the bulk of the limelight. And among the priority pitches the company positioned for October’s session was the fruit of the company’s successful acquisition of Mellanox. A new breed of datacenter device designed to monitor, analyze, and secure network traffic called for a new name and acronym and NVIDIA obliged, welcoming the birth of the data processing unit (DPU). Not coincidentally, it’s also a device harnessing the technology of an even bigger acquisition the company is in the process of closing, Arm — the owner and licensor of the ubiquitous (outside of PCs) Arm processors.

But despite its attention being pulled elsewhere, Nvidia managed to put out a new professional-caliber GPU, a product called the RTX A6000, likely the first of several Ampere-generation GPUs focused on professional visualization applications. First and foremost among those applications is CAD, and the RTX A6000 should deliver meaningful performance boosts for both traditional 3D graphics and emerging uses in rendering and compute acceleration.

RTX A6000, the First Professional Quadro GPU Built from Ampere

With Ampere unveiled and launched on the GeForce side of the house, it was only a matter of time before we’d see the first Ampere-based Quadro. In October of 2020, NVIDIA unveiled the RTX A6000 (with the A prefix standing for Ampere).

Typically, the first product based on a new generation appears at the upper end of the product line, and this was no exception. Raw specs on the A6000 track those of the company’s first and top-end gaming-focused Ampere cards, the GeForce RTX 3080 and 3090. All leverage the GA102 chip, the graphics-focused derivative of the flagship datacenter-oriented A100 launched at Ampere’s coming-out party last spring. NVIDIA maxed out memory on the RTX A6000, pairing the GA102 with 48 GB of GDDR6 memory, resulting in a card with a thermal design power, or TDP (the power level the card typically will not exceed), of 300 W.

The RTX A6000. Image source: Nvidia.

Expect to see the RTX A6000 in the channel by the end of 2020, and shipping in original equipment manufacturer (OEM) workstations in early 2021.

The end of the Quadro brand (at least for now). Interestingly, Nvidia at the last moment decided to drop the long-time Quadro brand, so we’re now supposed to call it the RTX A6000 for professionals, or something like that. More on the likely rationale behind the company’s decision to ditch the Quadro name ahead.

What Ampere Brings to CAD Professionals

In comparison with its direct predecessor, the previous-generation Turing-class Quadro RTX 6000, the RTX A6000’s raw specs suggest a rough doubling of maximum achievable performance. By populating more CUDA cores (the atomic processing element in the GPU’s array of cores responsible for executing the 3D graphics pipeline), the RTX A6000 can manage up to 39 TFLOPS (trillions of 32-bit floating-point operations per second), around 2X the peak rate of Turing. That figure sets an upper bound of roughly a 2X speed-up over Turing for 3D graphics, still the top priority for most CAD users, as well as for GPU-accelerated computation, with uses ranging from engineering simulations like finite-element analysis (FEA) and computational fluid dynamics (CFD) to assisting with rendering.
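As a rough sanity check on the 39 TFLOPS figure, peak FP32 throughput is conventionally estimated as CUDA cores × 2 operations per clock (one fused multiply-add) × clock rate. The sketch below applies that rule of thumb. The core counts and boost clocks are assumptions taken from public spec sheets rather than from this column, so treat the results as approximate.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each CUDA core retires one fused multiply-add
    (counted as 2 floating-point operations) per clock.
    """
    flops_per_clock = 2
    gflops = cuda_cores * flops_per_clock * boost_clock_ghz
    return gflops / 1000.0


# Assumed spec-sheet values (not stated in this article).
rtx_a6000 = peak_fp32_tflops(cuda_cores=10752, boost_clock_ghz=1.80)
quadro_rtx_6000 = peak_fp32_tflops(cuda_cores=4608, boost_clock_ghz=1.77)

print(f"RTX A6000:       {rtx_a6000:.1f} TFLOPS")
print(f"Quadro RTX 6000: {quadro_rtx_6000:.1f} TFLOPS")
print(f"Ratio:           {rtx_a6000 / quadro_rtx_6000:.2f}x")
```

Under these assumed inputs, the estimate for the RTX A6000 lands close to the 39 TFLOPS quoted above, and the generation-over-generation ratio comes out at a bit more than 2X.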

Furthermore, those two uses — graphics and compute — more frequently occur in parallel in the most efficient workflows. So those additional cores don’t just enable a speed-up for each use, but allow users to run more GPU-intensive workloads in parallel. NVIDIA configured a commensurate doubling of graphics memory footprint, outfitting the RTX A6000 with an impressive 48 GB of GDDR6 memory. Granted, 48 GB is overkill for the majority of mainstream CAD projects primarily leaning on the GPU for graphics. But it absolutely can help for large-scale applications in automotive, aerospace, building information modeling (BIM) and geographic information systems (GIS), especially when leaning on the GPU for tasks like simulation and rendering.

Nvidia's new RTX A6000 for professionals offers twice the raw performance and memory of its predecessor. Data source: Nvidia.

As is virtually always the case in assessing raw hardware specs, remember that figures like the “2X” above represent the maximum end of what a user might see. It may or may not be a frequently realized speed-up, depending on the specific content and task. Often, especially when considering other possible hardware system bottlenecks (e.g., CPU, memory, and storage), 2X is more the exception than the rule. Still, a doubling of raw hardware resources from one generation to the next is an impressive step.
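One way to see why 2X is the ceiling rather than the norm is Amdahl's law: if only part of a task's runtime is GPU-bound, doubling GPU throughput speeds up only that part. The sketch below is illustrative; the GPU-bound fractions are hypothetical values, not measurements of any CAD application.

```python
def overall_speedup(gpu_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law: end-to-end speed-up when only the GPU-bound
    fraction of the runtime gets faster."""
    if not 0.0 <= gpu_fraction <= 1.0:
        raise ValueError("gpu_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    return 1.0 / ((1.0 - gpu_fraction) + gpu_fraction / gpu_speedup)


# Hypothetical workloads: share of runtime spent waiting on the GPU.
for fraction in (0.25, 0.50, 0.75, 1.00):
    print(f"{fraction:.0%} GPU-bound -> {overall_speedup(fraction, 2.0):.2f}x overall")
```

Only a workload that is entirely GPU-bound sees the full 2X. A task that spends half its time on the CPU, memory, or storage tops out at about 1.33X.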

Furthermore, while twice the raw hardware resources provides at least an upper bound on the gains CAD pros might see running on an Ampere GPU tasked with conventional 3D graphics and compute acceleration, the performance story no longer ends there. When you stop and consider the novel architectural features pioneered in Volta and carried forward in Turing, the performance of the GPU’s CUDA core array reveals only part of the full picture of Ampere’s merits.

The introduction of the Volta generation in 2017 marked an inflection point in the evolution of Nvidia’s GPU, both in terms of the architectural DNA and the recognition of non-graphics applications as a major market driver and product shaper moving forward. In Volta, Nvidia invested substantially (in development, but more significantly in silicon area and therefore product cost) to integrate a multitude of new processing engines called Tensor Cores. Designed to accelerate machine learning (ML) applications, Tensor Cores also proved vital in speeding up the final stage of rendering, in which the image is resolved.

Volta eventually made its way to traditional workstation and CAD-focused GPUs, in the form of the derivative architecture Turing, spawning the current Quadro RTX 4000/5000/6000/8000 GPUs. In Turing, NVIDIA not only retained Tensor Cores, but piggybacked another engine on top: the RT Core, which explicitly accelerates ray tracing, the performance-critical algorithm at the heart of the vast majority of renderers. In conjunction with the image-resolving acceleration of Tensor Cores, RT Cores enabled Turing-class GPUs to promise dramatically faster rendering, with obvious appeal not only in media-centric applications but CAD as well.

Circling back to Ampere, we see Nvidia not only proceeding down that path toward ubiquitous — or at least more pervasive — real-time rendering, but putting its foot firmly on the gas. With Ampere, the company increased RT Core and Tensor Core performance by up to twice that of Turing, resulting in computational horsepower above and beyond what’s delivered by the traditional CUDA core array. (For more on Tensor and RT Cores in Turing, and how they assist in accelerating rendering, check out this previous column: “With New Turing, Nvidia Doubles Down on the Future of Real-Time Ray-Tracing.”)

Nvidia's RTX A6000 features increased RT Core and Tensor Core performance by up to twice that of its Turing-based predecessors. Data source: NVIDIA.

NVIDIA’s First PCIe Gen 4 GPU

The RTX A6000 is the first Nvidia GPU for professionals to support PCI Express 4.0 speeds (with twice the peak data transfer rate of Gen 3). Two notes on the jump to Gen 4: First, moving the workstation platform up to new standards of performance and compatibility is a bootstrapping process. That is, the initial enabling doesn’t typically deliver a dramatic performance boost on day one. Rather, it’s a process, one that requires (in this case) both GPU and platform to make the move, which then motivates applications and GPU drivers to make use of the extra I/O headroom. So no, for most CAD professionals using popular applications with at least reasonably mainstream model size and complexity, leveraging Gen 4 with all else equal probably won’t deliver a noticeable boost on day one. But remember, the forward advancement needs to start somewhere, and moving to Gen 4 will deliver dividends over time.

Second, looking beyond 3D graphics and instead at the case of emerging GPU applications — compute acceleration, data science, and machine learning — it’s more likely that a jump in I/O performance to Gen 4 may yield immediate performance boosts. It’s worth noting that as of this writing, only AMD’s CPUs are supporting Gen 4, currently in Ryzen and Threadripper SKUs. However, Intel’s upcoming 11th Gen Core family will make the move to Gen 4 as well.
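To put numbers on the Gen 3 to Gen 4 doubling, the sketch below computes the peak one-direction bandwidth of a x16 slot from the per-lane signaling rate (8 GT/s for Gen 3, 16 GT/s for Gen 4) and the 128b/130b line encoding both generations use. These are theoretical link maximums; the function name is illustrative, and real transfers come in lower once protocol overhead is counted.

```python
def pcie_gbytes_per_s(gigatransfers_per_s: float, lanes: int = 16) -> float:
    """Peak one-direction bandwidth in GB/s for a PCIe Gen 3 or Gen 4 link.

    Both generations use 128b/130b encoding: 128 payload bits are
    carried in every 130 bits sent on the wire.
    """
    encoding_efficiency = 128 / 130
    bits_per_byte = 8
    return gigatransfers_per_s * lanes * encoding_efficiency / bits_per_byte


gen3 = pcie_gbytes_per_s(8.0)    # Gen 3: 8 GT/s per lane
gen4 = pcie_gbytes_per_s(16.0)   # Gen 4: 16 GT/s per lane

print(f"Gen 3 x16: {gen3:.2f} GB/s per direction")
print(f"Gen 4 x16: {gen4:.2f} GB/s per direction")
```

That works out to roughly 15.75 GB/s for Gen 3 and 31.5 GB/s for Gen 4 in each direction, which is headroom that matters most when large datasets stream between system memory and the GPU.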

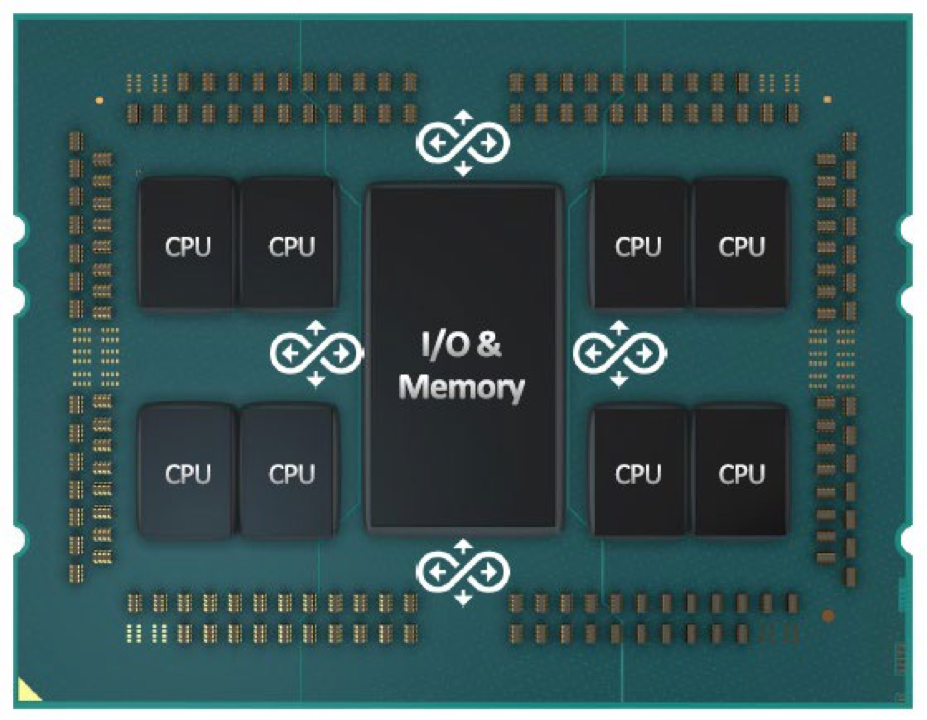

The Elastic GPU: 3rd Gen NVLink + Multi-Instance GPU (MIG)

Ampere-generation GPUs for professionals achieve more than performance gains for a single monolithic GPU. Two key scaling technologies allow Ampere, particularly in datacenter environments, to scale capabilities up and down for professional users. Borrowing an established term from the cloud, NVIDIA introduced Elastic GPU technology in Ampere. As with its cloud namesake, “elastic” refers to Ampere’s ability to scale up by harnessing multiple GPU chips and presenting them to software seamlessly and transparently. Just as cloud resources and virtualized desktops are presented as logical instances of a component, rather than the physical component itself, a logical Ampere GPU presented to software can be composed of a potentially large number of individual Ampere GPUs behind the curtain.

For example, the initial Ampere showcase computer, the A100-based DGX A100, comprises eight physical A100 GPUs, yet they are presented to the application as one monolithic GPU. Enabling the interconnect fabric is a new NVSwitch, with 36 ports running at 25 GB/s per direction, effectively doubling the numbers from the previous Volta/Turing generation.

Ampere leverages third-generation NVLink to seamlessly scale one physical GPU up to a datacenter-scale cluster. Image source: NVIDIA.

Elastic GPU drives up performance by aggregating multiple physical Ampere GPUs to create one bigger virtual GPU that appears monolithic to the application. That’s great for big-to-unbounded compute problems running in clouds and on-premises datacenters, like photorealistic rendering of complex scenes or ultra-fine-grained simulations. But Ampere supports the inverse as well with multi-instance GPU (MIG) technology, which breaks one physical GPU down into multiple virtual GPU instances (up to 7). Both MIG and NVLink allow compute resource providers (e.g., private and cloud) to more finely allocate GPU resources among clients and tasks. As such, Amazon Web Services, for example, could right-size clients to logical slices of a single physical Ampere GPU.

Ampere's multi-instance GPU (MIG) allows for as many as seven logical GPUs to be carved from one physical A100. Image source: NVIDIA.
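The right-sizing idea can be illustrated with a toy allocator that packs client requests onto physical GPUs, each offering up to seven slices. This is a simplified sketch, not Nvidia's MIG tooling: real MIG exposes a fixed set of instance profiles with placement rules, which the hypothetical `assign_slices` function below ignores, and the client names are made up.

```python
MAX_INSTANCES_PER_GPU = 7  # per the article: up to 7 instances per physical GPU


def assign_slices(requests: dict[str, int], gpu_count: int) -> dict[str, int]:
    """First-fit assignment of clients to physical GPUs.

    `requests` maps a client name to the number of slices it needs (1-7).
    Returns a mapping of client name -> index of the GPU hosting it.
    """
    free = [MAX_INSTANCES_PER_GPU] * gpu_count
    placement: dict[str, int] = {}
    # Place the largest requests first so they are not crowded out.
    for client, slices in sorted(requests.items(), key=lambda kv: -kv[1]):
        if not 1 <= slices <= MAX_INSTANCES_PER_GPU:
            raise ValueError(f"{client}: slices must be between 1 and 7")
        for gpu, available in enumerate(free):
            if slices <= available:
                free[gpu] -= slices
                placement[client] = gpu
                break
        else:
            raise RuntimeError(f"no capacity left for {client}")
    return placement


# Hypothetical clients sharing two physical GPUs.
demo = assign_slices(
    {"render": 4, "sim": 3, "viewer-a": 1, "viewer-b": 2}, gpu_count=2
)
print(demo)
```

Here the two heavy jobs fill one GPU completely while the two light viewers share the second, leaving four slices free for the next client.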

Ampere-Generation GPU Exploits More Synergy Across Applications and Platforms

In conjunction with the RTX A6000 release came the A40 GPU, similar in functionality and leveraging Ampere silicon as well, but server-tailored for duty in GPU compute, remote desktop hosting (virtually or physically hosted), and machine learning. Expect to see more professional GPU introductions with these types of sibling pairs: one for desktop and laptop clients, and one for servers. With the growth in both GPU-enabled datacenter compute acceleration and the use of remote (virtual or otherwise) rackmounted datacenter-resident workstations, deploying new GPU technology in both forms serving the same base core of professional applications makes increasing sense.

This new reality also helps explain the aforementioned retirement of the Quadro branding. For more than two decades, NVIDIA has managed to ingrain Quadro in the market as the brand for GPUs targeting professional 3D visualization. But with both such products now enveloping far more than 3D visualization, in conjunction with deployment in a wider range of platforms beyond traditional client workstations, the company is clearly looking to decouple the old brand to reflect that evolution.

The datacenter-focused sibling: the Nvidia A40. Image source: Nvidia.

Dovetailing naturally with the announcement of the Ampere sibling GPUs was the push for enhancements in Nvidia’s Omniverse platform. Developed as an in-house collaboration tool, Omniverse enables remote collaboration and simulation in rich visual computing environments across multiple geographic sites and applications. CAD professionals across the spectrum will find Omniverse of interest, but perhaps most intrigued will be large-scale AEC businesses that are finding projects more fragmented across more contributors, including staff who may be in another time zone or even on the opposite side of the globe.

To ensure all applications are speaking the same language over those portals, Omniverse employs Pixar’s Universal Scene Description technology to specify modeling, shading, animation, and lighting, while tapping NVIDIA’s own MDL (Material Definition Language) as a standard interchange of material parameters. The result? Contributors can develop different components of the same overall scene — or in the case of AEC or manufacturing, the same project — with each seeing the same representation on their individual screens, synchronized in real time.

Omniverse's common baseline visual computing environment allows for multiple contributors across networks with minimal bandwidth. Image source: NVIDIA.

Plug-in Omniverse Connectors provide the portal to bring in applications running live. Since everyone’s on the same baseline, the only things that get transmitted over the network during collaboration are the changes, which are then reflected in real time among all collaborators. Omniverse will enter beta this fall with connectors for 3ds Max, Blender, Maya, and Revit, among others.
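The bandwidth savings from sending only changes can be shown with a generic delta-sync sketch. This is not the Omniverse or USD API; the flat dictionary scene model and the `diff_scene` and `apply_delta` helpers are hypothetical stand-ins used purely to illustrate the idea.

```python
_MISSING = object()  # sentinel so a stored value of None still compares correctly


def diff_scene(old: dict, new: dict) -> dict:
    """Return only what changed between two flat scene snapshots."""
    changed = {k: v for k, v in new.items() if old.get(k, _MISSING) != v}
    removed = [k for k in old if k not in new]
    return {"set": changed, "delete": removed}


def apply_delta(scene: dict, delta: dict) -> dict:
    """Apply a delta to a collaborator's copy of the shared baseline."""
    updated = dict(scene)
    updated.update(delta["set"])
    for key in delta["delete"]:
        updated.pop(key, None)
    return updated


# Every collaborator starts from the same baseline.
baseline = {
    "wall/height": 3.0,
    "wall/material": "concrete",
    "lamp/intensity": 800,
}

# One contributor brightens the lamp and adds a door.
edited = dict(baseline, **{"lamp/intensity": 1200, "door/width": 0.9})

delta = diff_scene(baseline, edited)
print(delta)  # only the two touched properties travel over the network
assert apply_delta(baseline, delta) == edited
```

However large the shared scene grows, the payload stays proportional to the edit rather than to the model.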

The end user's Omniverse platform environment. Image source: Nvidia.

Omniverse takes advantage of Ampere’s multi-GPU scalability, such that users have another axis to loosen constraints on the typical quality vs. performance tradeoff. That is, typically a user will need to adjust down quality to hit a performance goal, or allow for slower performance to hit a quality goal. With scalable multi-GPU, Omniverse can instead harness more GPUs as necessary to hit top-end quality and performance goals.

CAD, BIM, and GIS are among the most compelling applications for a multi-site, data-compatible 3D development environment like Omniverse. Image source: Nvidia.

Pursuing the Ultimate Goal: Shrinking Design and Verification Time

By pushing GPUs to more efficiently accelerate rendering and compute-oriented applications, technologies like Ampere and products like the RTX A6000 and A40 aren’t focused on simply speeding up your existing workflow. Rather, they hopefully open the door to expanding and evolving a workflow to allow more frequent and more detailed execution of valuable but compute-expensive workloads.

Consider typical CAD and BIM workflows, with work time dominated by the sequential and iterative modify/visualize/verify cycle. That verify portion can now integrate simulations (and renderings) earlier and more often, without waiting until later stages of the workflow. Think of the tasks that you’ve probably felt pressured to ration in the past, simply because they take too long to exploit frequently. All else being equal, wouldn’t designers or stylists prefer to render more often to validate lighting or aesthetics? Likewise, wouldn’t engineers love to run CFD or FEA simulations more frequently, for longer durations, or at finer-grained detail? What about integrating those luxurious-but-valuable high-demand usage models into the modify/visualize/verify cycle, rather than limiting them to once or twice at the end?

One supporter of that evolution is Tim Logan, the VP of Computational Applications Development at HKS, an international firm with more than 1,400 architects engaged in projects spread across multiple sites among their 24 locations in Europe, Asia, the Middle East, and the Americas. Supporting NVIDIA’s launch of the new Ampere GPUs, Logan stated, “With the NVIDIA RTX A6000, we are getting closer to being able to run simulations in real time where we’re getting near-instantaneous feedback on design changes. This is bringing real building simulation down from days and weeks, to minutes or hours.”

Speeding complex lighting simulations like this one from HKS opens the door for not just quicker, but more frequent feedback design loop iterations. Image source: HKS.

Then expand that notion by considering the synergistic addition of Omniverse, simplifying the effort involved in remote, multi-contributor CAD-based projects — right up the alley of firms like HKS. And moving to a centralized cloud-resident repository for Omniverse-type project data opens the door for further benefits, like leveraging Ampere-based GPU scaling in the datacenter for tackling mega-scale jobs in the absolute minimum time.

Clearly illustrated by the emergence of GPU siblings the RTX A6000 and A40 — as well as the understandable retirement of the company’s venerable Quadro brand — the GPU has grown well beyond its role as a 3D graphics engine for client workstations. In that respect, the G (standing for graphics) in GPU might be worthy of retirement as well, because NVIDIA’s sights are far more holistic these days, looking at serving the entirety of professional users’ computing needs in the pursuit of what is truly the ultimate goal: a shorter path to a higher-quality end product.

Alex Herrera

With more than 30 years of engineering, marketing, and management experience in the semiconductor industry, Alex Herrera is a consultant focusing on high-performance graphics and workstations. Author of frequent articles covering both the business and technology of graphics, he is also responsible for the Workstation Report series, published by Jon Peddie Research.
