The first Ampere-generation GPU (graphics processing unit) focused on professional 3D visualization has arrived. In October 2020, I covered the introduction of NVIDIA’s RTX A6000 and the company’s claims of significant steps forward in traditional 3D graphics and rendering, in addition to computing acceleration.

Now that we have the product in hand, it’s possible to ascertain how those hardware-centric metrics translate to performance gains for a CAD user’s day-to-day workloads.

Figure 1. NVIDIA’s RTX A6000 GPU (graphics processing unit) brings new technology to the CAD and visualization world.

The RTX A6000 is most appropriate for a specialized set of CAD and visualization professionals, mainly because of its roughly $5,000 price and because it occupies two PCI Express slots and draws up to 300W of power. It may well fit the budgets and systems of those who specialize in oil and gas, machine learning, and data analytics, but most likely not those of the day-to-day CAD drafter.

While this first Ampere product is designed for high-end users, benchmarking the RTX A6000 should provide a meaningful indication of how future models will advance 3D visualization capabilities for the many. As Ampere technology trickles down the NVIDIA product line, similar gains in generation-to-generation performance should become available in more economical, mass-market GPUs that will apply to the bulk of the CAD hardware marketplace this year.

Ampere and RTX A6000 Refresh

NVIDIA’s new RTX A6000 offers around 2X the raw performance and memory of its predecessor, now measured across three hardware engines, not just one. As covered in more detail in last October’s column, NVIDIA reset the bar for GPU technology with the introduction of Ampere’s predecessor, Turing. Prior to Turing, GPUs had been measured overwhelmingly by the ability of their shader architecture (supported by technology such as memory and input/output) to crank through conventional 3D graphics processing. Turing, however, introduced two additional engines dedicated to accelerating ray tracing and machine learning: RT Cores and Tensor Cores, respectively. It’s worth emphasizing that those Tensor Cores also speed up the resolve and refinement stages in rendering, as I first covered in April 2018.

Think of Ampere as offering roughly double the raw processing capability of Turing. Averaged across those three engines, peak throughput gains sit right around 2X, complemented by a commensurate gain in graphics memory capacity. The RTX A6000 comes with more graphics memory than many workstations have system memory: an impressive 48 GB, compared to the Quadro RTX 6000’s 24 GB.

Figure 2. NVIDIA’s RTX A6000 for professionals offers on average around 2X the raw performance and memory of its predecessor, now measured across three hardware engines, not just one.

Benchmarking the RTX A6000

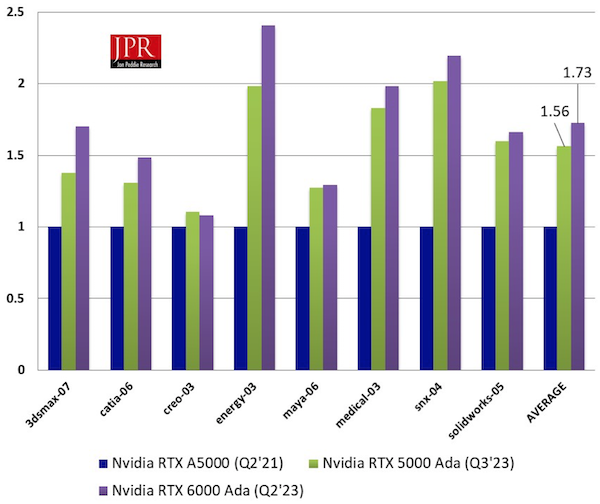

That overall 2X speedup, derived from peak hardware specifications, was as far as any quantitative analysis could go until now. With an RTX A6000 in hand, I kicked off real-world testing with the usual starting point for professional visualization applications: SPEC’s SPECViewperf, in its latest SPECViewperf2020 version. SPECViewperf2020 runs through eight viewsets, which are 3D graphics scenes with rapidly changing viewpoints. Several of those, drawn from CATIA, 3ds Max, Creo, SolidWorks, and Siemens NX, reflect typical visuals seen in manufacturing, design, engineering, and architecture.

Figure 3. A sampling of SPECViewperf2020’s CAD-oriented viewsets, including images from CATIA and SolidWorks.

I ran SPECViewperf2020 on the same high-performance system, swapping between the RTX A6000 and its predecessor, the Quadro RTX 6000. On average, the RTX A6000 ran through the viewsets 15% faster than the Quadro RTX 6000, with individual speedups ranging from 2% up to 43%.

Figure 4. SPEC’s SPECViewperf2020 tests showed the RTX A6000 improving performance over the Quadro RTX 6000 by 2% to 43%, depending on the viewset.
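Because SPECViewperf scores are higher-is-better, a per-viewset speedup is simply the new score divided by the old one, and a "15% faster" average corresponds to a mean factor of about 1.15. The column doesn't say which averaging method was used, so the minimal sketch below offers both an arithmetic and a geometric mean; the function names are hypothetical helpers, not part of any SPEC tool, and no measured scores are reproduced here.

```python
from math import prod
from typing import Dict, Iterable


def viewset_speedups(old_scores: Dict[str, float],
                     new_scores: Dict[str, float]) -> Dict[str, float]:
    """Per-viewset speedup factor. Scores are higher-is-better, so new / old."""
    return {name: new_scores[name] / old_scores[name] for name in old_scores}


def arithmetic_mean(values: Iterable[float]) -> float:
    values = list(values)
    return sum(values) / len(values)


def geometric_mean(values: Iterable[float]) -> float:
    """Often preferred for averaging ratios, since it treats gains and losses symmetrically."""
    values = list(values)
    return prod(values) ** (1.0 / len(values))


def as_percent_gain(factor: float) -> float:
    """Convert a speedup factor (e.g., 1.15) into a percent gain (e.g., 15.0)."""
    return (factor - 1.0) * 100.0
```

Feeding in the two GPUs' per-viewset composite scores would yield the per-viewset range (2% to 43% here) and the overall average.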

It’s not easy to determine definitively, but I speculate that the most notable speed increase, seen on the energy-03 viewset, has more to do with the RTX A6000’s 2X larger graphics memory than with its additional processing power.

What the RTX A6000 Does for Rendering

Just a few years ago, this performance-measuring exercise would be wrapped up at this point: test the GPU’s aptitude on 3D graphics workloads, in this case specifically geared to CAD-type visuals, and we’re done. But with the advent of hardware-accelerated ray tracing, pioneered by NVIDIA’s RTX technology, there’s more to the story. The first two letters of RTX stand for ray tracing, which in one form or another is the foundation of virtually all rendering algorithms, and RTX now represents as significant an axis of technological evolution and advancement as traditional 3D graphics does.

Remember that two of those three engines, the RT Core and the Tensor Core, represent a significant investment in research and development, as well as in the silicon itself, to accelerate rendering and machine learning.

How did that incremental investment pay off? To get some idea, I used a popular GPU-accelerated renderer, Otoy’s OctaneRender, which offers extended support for Ampere’s features. Using content provided by NVIDIA (sourced from a partner), albeit more relevant to digital media development than to CAD, I rendered scenes with varying numbers of heads (5, 6, and 11) on the same system, again swapping between the RTX A6000 and the Quadro RTX 6000.

Figure 5. To test the rendering speed benefits of NVIDIA’s RTX A6000, I rendered this 6-head digital image on Otoy’s OctaneRender comparing the RTX A6000 and the Quadro RTX 6000.

The testing indicated not only Ampere’s additional throughput but also how critically GPU rendering depends on the size of attached graphics memory. The RTX A6000 rendered the 5-head model 2.57X faster than its predecessor, a speed benefit far more consequential than what it delivered on the conventional SPECViewperf2020 3D graphics viewsets.

| Scene | Quadro RTX 6000 | RTX A6000 | Speedup factor |
|---|---|---|---|
| 5-head image | 36 | 14.0 | 2.57 |
| 6-head image | Fail | 41.0 | -- |
| 11-head image | Fail | 107.0 | -- |

OctaneRender results for NVIDIA’s RTX A6000 relative to the Quadro RTX 6000.
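The speedup column in the table above can be reproduced by dividing the Quadro RTX 6000 figure by the RTX A6000 figure. The sketch below assumes the numbers are render times (lower is better), which is consistent with 36 / 14.0 giving 2.57; the table doesn't state units, and a failed run is modeled as `None` because no ratio can be formed for it.

```python
from typing import Dict, Optional, Tuple

# Figures from the OctaneRender table above (units as reported in the source).
# None marks a scene that failed to render on that GPU.
RESULTS: Dict[str, Tuple[Optional[float], Optional[float]]] = {
    "5-head image": (36.0, 14.0),
    "6-head image": (None, 41.0),
    "11-head image": (None, 107.0),
}


def speedup_factor(old_time: Optional[float], new_time: Optional[float]) -> Optional[float]:
    """Lower-is-better timings, so speedup = old_time / new_time.

    Returns None when either run failed, since the comparison is undefined.
    """
    if old_time is None or new_time is None:
        return None
    return old_time / new_time


for scene, (quadro, a6000) in RESULTS.items():
    factor = speedup_factor(quadro, a6000)
    print(scene, "--" if factor is None else f"{factor:.2f}")
```

A speedup factor of 2.57 corresponds to a 157% gain, which is why the rendering improvement dwarfs the 15% average seen on the SPECViewperf2020 viewsets.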

How did the performance compare on the 6- and 11-head models? Here’s the second noteworthy result from testing: we don’t know, because both failed to render on the Quadro RTX 6000. The hefty size of those models (the bytes add up quickly when you realize the scene has more than 100 million primitives) exceeded the Quadro RTX 6000’s 24 GB of graphics memory. For rendering highly complex scenes, both performance and graphics memory size matter, and that’s why, when that capability is an absolute must-have, you won’t blink at the $5,000 price tag. It’s worth noting that OctaneRender can be set to extend the GPU’s working set into system memory, so larger scenes shouldn’t fail outright, but doing so brings its own problems, not least slower rendering as data shuttles across the PCI Express bus.
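Some quick arithmetic shows why 100 million primitives can overwhelm 24 GB. If geometry alone had to fit, each primitive could average at most about 240 bytes on the Quadro RTX 6000 versus 480 bytes on the RTX A6000. The sketch below assumes decimal gigabytes and ignores textures, frame buffers, acceleration structures, and driver overhead, so the real per-primitive budget is lower; the helper names are hypothetical.

```python
def max_bytes_per_primitive(capacity_gb: float, num_primitives: int) -> float:
    """Average bytes each primitive could occupy if the scene alone filled GPU memory.

    Assumes 1 GB = 1e9 bytes and ignores every other consumer of graphics memory,
    so this is an upper bound, not a realistic budget.
    """
    return capacity_gb * 1e9 / num_primitives


def scene_fits(num_primitives: int, bytes_per_primitive: float, capacity_gb: float) -> bool:
    """True if the geometry alone fits within the given graphics memory."""
    return num_primitives * bytes_per_primitive <= capacity_gb * 1e9


PRIMITIVES = 100_000_000  # "more than 100 million primitives", per the text
for name, capacity in [("Quadro RTX 6000", 24), ("RTX A6000", 48)]:
    budget = max_bytes_per_primitive(capacity, PRIMITIVES)
    print(f"{name}: at most {budget:.0f} bytes per primitive")
```

Any scene whose real per-primitive cost (vertices, normals, UVs, material data, and BVH nodes) lands between those two bounds will render on the 48 GB card and fail on the 24 GB one, which matches the pattern in the table.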

For a more detailed dive into the dramatic differences between 3D rendering and 3D graphics approaches, check out this previous column covering the launch of RTX with NVIDIA’s Volta-generation GPU, as well as this follow-on introducing RT Cores in Turing, the successor to Volta and predecessor to Ampere.

Hardware-Based Motion Blur

Motion blur is a critical visual cue that tricks the human eye into perceiving motion in a static image. Creating motion blur is not a natural offshoot of either the typical 3D graphics or rendering process; instead, it requires additional, time-consuming computation. With Ampere, NVIDIA for the first time offers hardware-accelerated motion blur, enabling the visual effect in a GPU-centric rendering pipeline.

Figure 6. NVIDIA’s Ampere technology brings motion blur via hardware. Here, a scene is rendered on Ampere without motion blur (top) and with it (bottom).
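Why is motion blur expensive in a ray tracer? A common software approach, distribution ray tracing, gives every sample its own random time within the shutter interval, places moving geometry where it would be at that instant, and averages the results. The 1D sketch below illustrates that generic technique only; it is not NVIDIA’s hardware implementation, and the moving "box" scene and parameter names are hypothetical.

```python
import random
from typing import List, Optional


def render_motion_blur(num_pixels: int, box_width: float, start: float,
                       velocity: float, samples_per_pixel: int,
                       rng: Optional[random.Random] = None) -> List[float]:
    """1D distribution-ray-tracing sketch of motion blur.

    A box of width box_width moves from `start` (time 0) to `start + velocity`
    (time 1). Each sample draws its own time t in the shutter interval [0, 1),
    the box is placed at its position for that t, and a pixel's value is the
    fraction of its samples that hit the box.
    """
    rng = rng if rng is not None else random.Random(0)  # seeded for repeatability
    image = []
    for px in range(num_pixels):
        x = px + 0.5  # sample at the pixel center
        hits = 0
        for _ in range(samples_per_pixel):
            t = rng.random()  # per-sample time within the shutter interval
            left = start + velocity * t
            if left <= x < left + box_width:
                hits += 1
        image.append(hits / samples_per_pixel)
    return image


# Work grows linearly with samples_per_pixel, and smooth blur needs many time samples.
blurred = render_motion_blur(num_pixels=16, box_width=4, start=2, velocity=8,
                             samples_per_pixel=256)
print([round(v, 2) for v in blurred])
```

With `velocity=0` every sample sees the box in the same place and the output is a hard-edged 0/1 image; with motion, pixels along the path take fractional values, which is the blur, at the cost of many extra intersection tests per pixel.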

While motion blur and other rendering techniques are now touted as “new” hardware-based tools, they’ve been available in software for a long time. There are also multi-pass techniques that can leverage GPU hardware acceleration, albeit more slowly and with potential artifacts. Even if rendering is a must-have in your CAD workflow, hardware motion blur may not be. Still, if one reason you passed on the performance benefits of GPU rendering was that effects such as blur were problematic, Ampere technology gives you another reason to take the plunge.
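One classic multi-pass approach, in the spirit of an accumulation buffer, renders the scene several times at evenly spaced moments within the shutter interval and averages the frames. Each pass can be fully GPU-accelerated, but with too few passes a fast-moving object shows up as discrete ghost copies rather than a smooth smear. The 1D sketch below demonstrates that trade-off; the scene and function names are hypothetical, and this is a generic technique, not a description of any particular product.

```python
from typing import List


def render_static(num_pixels: int, left: float, width: float) -> List[float]:
    """One conventional (unblurred) pass: 1.0 where the box covers the pixel center."""
    return [1.0 if left <= px + 0.5 < left + width else 0.0 for px in range(num_pixels)]


def accumulation_blur(num_pixels: int, width: float, start: float,
                      velocity: float, passes: int) -> List[float]:
    """Multi-pass motion blur: render at `passes` evenly spaced times and average."""
    accum = [0.0] * num_pixels
    for i in range(passes):
        t = (i + 0.5) / passes  # midpoint of each time slice in [0, 1)
        frame = render_static(num_pixels, start + velocity * t, width)
        accum = [a + f for a, f in zip(accum, frame)]
    return [a / passes for a in accum]


# Two passes: the moving box appears as two separate ghosts with a gap between them.
print(accumulation_blur(num_pixels=10, width=1, start=0, velocity=8, passes=2))
# Eight passes: the same motion now smears evenly across the path.
print(accumulation_blur(num_pixels=10, width=1, start=0, velocity=8, passes=8))
```

Raising the pass count removes the gaps, but each extra pass is a full re-render of the scene, which is where the slowdown mentioned above comes from.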

A Few Conclusions

While the RTX A6000 is designed for high-end visualization, we wanted to ascertain how Ampere technology will help general CAD users better address their 3D visualization demands as it inevitably trickles down NVIDIA’s product line, especially as these demands increasingly encompass rendering in addition to tried-and-true interactive 3D graphics.

I reached a few basic conclusions after analyzing the test results: a couple about the merits of Ampere technology, and another about how Ampere reflects where 3D visualization is headed in general. First, let’s again assume that the RTX A6000’s speed increase over its predecessor will generally reflect the increases in the Ampere products to come. If the increase does vary substantially, future mainstream Ampere GPUs will likely offer a better generation-to-generation 3D graphics boost with a lower relative gain in rendering speed. That aside, based on real-world testing so far, it seems reasonable to assert that Ampere’s gain when processing today’s typical 3D graphics CAD content may not make an upgrade from Turing-class RTX GPUs particularly compelling.

Two big caveats go along with that statement. One, we’re talking about today’s content, and the complexity of content tends to lag what mainstream hardware can effectively handle. That goes double for CAD and many other professional spaces, where the detail of lighting and shading isn’t necessarily cutting edge. (Running modern gaming content, on the other hand, could very well show a much more substantial jump in performance moving from Turing to Ampere.) Still, CAD content will evolve to demand more, even if only in scene complexity rather than visual detail and effects, to the point that any performance gains will have value.

Second, and arguably more significant, Ampere’s doubling down on RT Cores and Tensor Cores supports the notion that rendering will continue to grow gradually in prominence. Rendering will always produce better images than 3D graphics. That is, all else being equal, there would be little reason to ever visualize with 3D graphics rather than true physically based rendering. Rendering specifically emulates the natural, physical properties of light and materials in a holistic, environment-wide manner. 3D graphics does so to some degree, but its overriding goal is to provide visually appealing imagery that can be processed in real time, or at least with minimal delay. By contrast, the terms “real time” and “quick” were until recently absent from discussions about rendering, a process in which a second of Hollywood-quality imagery could take an hour to produce.

However, that’s been changing steadily, and NVIDIA’s investment in development and silicon for RTX technology has dramatically narrowed the gap between rendering’s offline speeds and real time. As such, use of rendering technology will only increase. Granted, there are many reasons why the transition from 3D graphics to rendering is a huge step, but the debate is about when, not if, it will happen. Most CAD spaces already value rendering, particularly AEC, automotive, aerospace, and product design. Exploiting rendering more frequently, earlier in the design cycle, and with more detail reaps benefits, whether in bolstering client buy-in, improving the quality of the final product, or shrinking production time.

Once you accept that argument, it’s clear why NVIDIA designed Ampere to deliver 15% higher performance on legacy 3D graphics CAD content (and likely something more significant on more cutting-edge content) versus a robust 150%-plus gain when rendering with photorealistic quality. Computer visualization is changing, and it’s changing for our benefit. We are on the road to pervasive rendering, and Ampere technology represents one more substantial step in that direction.

Alex Herrera

With more than 30 years of engineering, marketing, and management experience in the semiconductor industry, Alex Herrera is a consultant focusing on high-performance graphics and workstations. Author of frequent articles covering both the business and technology of graphics, he is also responsible for the Workstation Report series, published by Jon Peddie Research.
